Cloudera Security Overview

As a system designed to support vast amounts and types of data, Cloudera clusters must meet ever-evolving security requirements imposed by regulating agencies, governments, industries, and the general public. Cloudera clusters comprise both Hadoop core and ecosystem components, all of which must be protected from a variety of threats to ensure the confidentiality, integrity, and availability of all the cluster's services and data. This overview introduces security requirements, security levels, and the Hadoop security architecture.

Security Requirements

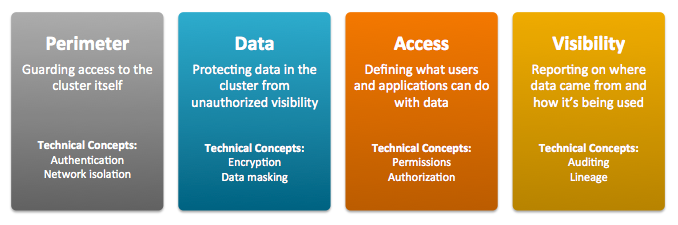

Goals for data management systems, such as confidentiality, integrity, and availability, require that the system be secured across several dimensions. These can be characterized in terms

of both general operational goals and technical concepts, as shown in the figure below:

- Perimeter: Access to the cluster must be protected from a variety of threats coming from internal and external networks and from a variety of actors. Network isolation can be provided by proper configuration of firewalls, routers, subnets, and the proper use of public and private IP addresses, for example. Authentication mechanisms ensure that people, processes, and applications properly identify themselves to the cluster and prove they are who they say they are, before gaining access to the cluster.

- Data: Data in the cluster must always be protected from unauthorized exposure. Similarly, communications between the nodes in the cluster must be protected. Encryption mechanisms ensure that even if network packets are intercepted or hard-disk drives are physically removed from the system by bad actors, the contents are not usable.

- Access: Access to any specific service or item of data within the cluster must be specifically granted. Authorization mechanisms ensure that once users have authenticated themselves to the cluster, they can only see the data and use the processes to which they have been granted specific permission.

- Visibility: Visibility means that the history of data changes is transparent and capable of meeting data governance policies. Auditing mechanisms ensure that all actions on data and its lineage (source, changes over time, and so on) are documented as they occur.
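The Access and Visibility goals above can be illustrated with a minimal sketch: a default-deny authorization check that records every decision in an audit log. This is a toy model, not how any Cloudera component is implemented; the `AccessController` class and the user, resource, and action names are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AccessController:
    """Toy default-deny authorizer that records every decision (hypothetical)."""
    # Maps (user, resource) to the set of actions explicitly granted.
    grants: dict = field(default_factory=dict)
    # Append-only record of every access decision (the Visibility goal).
    audit_log: list = field(default_factory=list)

    def grant(self, user, resource, action):
        self.grants.setdefault((user, resource), set()).add(action)

    def is_allowed(self, user, resource, action):
        # Default deny: anything not explicitly granted is refused.
        allowed = action in self.grants.get((user, resource), set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "resource": resource,
            "action": action,
            "allowed": allowed,
        })
        return allowed


controller = AccessController()
controller.grant("alice", "sales_db.orders", "select")

print(controller.is_allowed("alice", "sales_db.orders", "select"))  # True
print(controller.is_allowed("alice", "sales_db.orders", "insert"))  # False
print(controller.is_allowed("bob", "sales_db.orders", "select"))    # False
print(len(controller.audit_log))  # 3
```

The sketch assumes the user has already been authenticated; denied requests are logged as well as granted ones, since an audit trail that omits refusals cannot show attempted misuse.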

Securing the cluster to meet specific organizational goals involves using security features inherent to the Hadoop ecosystem as well as using external security infrastructure. The various security mechanisms can be applied at a range of levels.

Security Levels

The figure below shows the range of security levels that can be implemented for a Cloudera cluster, from non-secure (0) to most secure (3). As the sensitivity and volume of data on the cluster increase, so should the security level you choose for the cluster.

With level 3 security, your Cloudera cluster is ready for full compliance with various industry and regulatory mandates and is ready for audit when necessary. The table below describes the levels in more detail:

| Level | Security | Characteristics |
|---|---|---|
| 0 | Non-secure | No security configured. Non-secure clusters should never be used in production environments because they are vulnerable to any and all attacks and exploits. |
| 1 | Minimal | Configured for authentication, authorization, and auditing. Authentication is first configured to ensure that users and services can access the cluster only after proving their identities. Next, authorization mechanisms are applied to assign privileges to users and user groups. Auditing procedures keep track of who accesses the cluster (and how). |
| 2 | More | Sensitive data is encrypted. Key management systems handle encryption keys. Auditing has been set up for data in metastores. System metadata is reviewed and updated regularly. Ideally, the cluster has been set up so that lineage for any data object can be traced (data governance). |
| 3 | Most | The secure enterprise data hub (EDH) is one in which all data, both data-at-rest and data-in-transit, is encrypted and the key management system is fault-tolerant. Auditing mechanisms comply with industry, government, and regulatory standards (PCI, HIPAA, NIST, for example), and extend from the EDH to the other systems that integrate with it. Cluster administrators are well-trained, security procedures have been certified by an expert, and the cluster can pass technical review. |
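Because each level in the table builds on the one before it, the levels can be modeled as cumulative requirement sets. The following is a rough sketch under that assumption; the capability names are hypothetical labels for the table's technical characteristics, and the non-technical level 3 criteria (administrator training, certified procedures, technical review) are deliberately left out.

```python
from enum import IntEnum


class SecurityLevel(IntEnum):
    NON_SECURE = 0
    MINIMAL = 1
    MORE = 2
    MOST = 3


# Hypothetical capability names. Each level also requires everything
# listed for the levels below it.
REQUIREMENTS = {
    SecurityLevel.MINIMAL: {"authentication", "authorization", "auditing"},
    SecurityLevel.MORE: {
        "sensitive_data_encrypted",
        "key_management",
        "metastore_auditing",
    },
    SecurityLevel.MOST: {
        "all_data_at_rest_encrypted",
        "all_data_in_transit_encrypted",
        "fault_tolerant_key_management",
        "compliant_auditing",
    },
}


def assess_level(capabilities):
    """Return the highest level whose cumulative requirements are all met."""
    present = set(capabilities)
    level = SecurityLevel.NON_SECURE
    for candidate in (SecurityLevel.MINIMAL, SecurityLevel.MORE, SecurityLevel.MOST):
        if not REQUIREMENTS[candidate] <= present:
            break  # a gap at this level caps the result at the level below
        level = candidate
    return level


print(assess_level({"authentication", "authorization", "auditing"}).name)  # MINIMAL
print(assess_level({"sensitive_data_encrypted", "key_management"}).name)   # NON_SECURE
```

The second call returns `NON_SECURE` because encryption without authentication, authorization, and auditing does not satisfy level 1, which mirrors the ordering the table describes.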

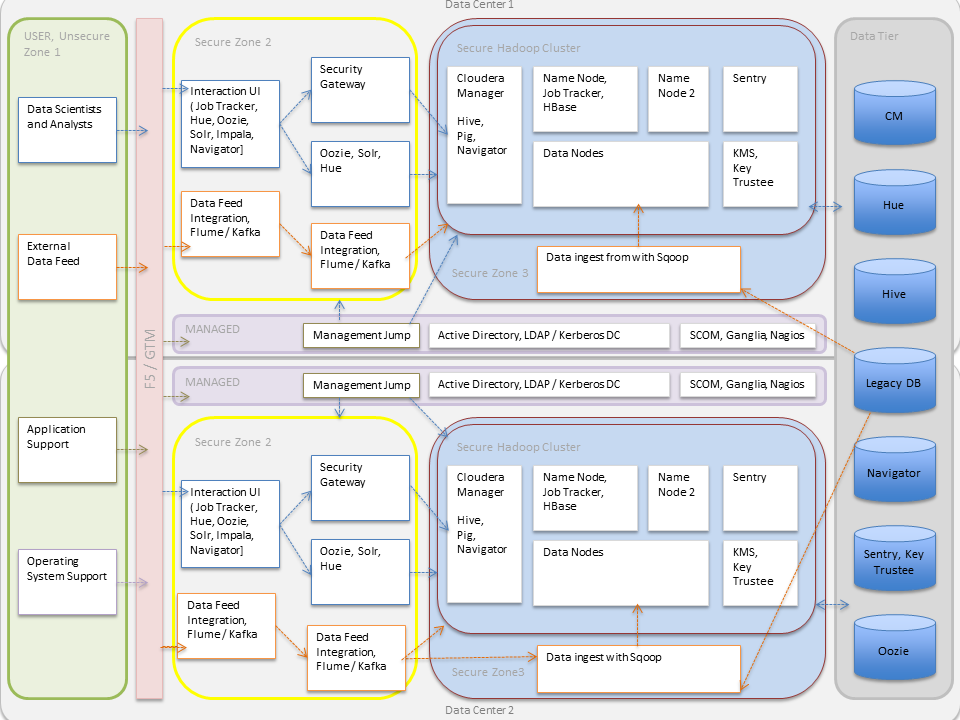

Hadoop Security Architecture

- External data streams are authenticated by mechanisms in place for Flume and Kafka. Data from legacy databases is ingested using Sqoop. Data scientists and BI analysts can use interfaces such as Hue to work with data on Impala or Hive, for example, to create and submit jobs. Kerberos authentication can be leveraged to protect all these interactions.

- Encryption can be applied to data at rest using transparent HDFS encryption with an enterprise-grade Key Trustee Server. Cloudera also recommends using Navigator Encrypt to protect data on a cluster associated with the Cloudera Manager, Cloudera Navigator, Hive and HBase metastores, and any log files or spills.

- Authorization policies can be enforced using Sentry (for services such as Hive, Impala, and Search) as well as HDFS Access Control Lists.

- Auditing capabilities can be provided by using Cloudera Navigator.
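As a rough illustration of how some of the mechanisms above surface in configuration, the sketch below renders a handful of Apache Hadoop properties in the `*-site.xml` layout. The property names are standard Apache Hadoop settings; the values are illustrative, the `render_site_xml` helper is hypothetical, and on a Cloudera cluster such settings would typically be managed through Cloudera Manager rather than written by hand. Sentry, Navigator, and Key Trustee Server configuration is not covered.

```python
import xml.etree.ElementTree as ET

# Apache Hadoop property names; the values shown are illustrative.
CORE_SITE = {
    "hadoop.security.authentication": "kerberos",  # Kerberos instead of "simple"
    "hadoop.security.authorization": "true",       # service-level authorization
    "hadoop.rpc.protection": "privacy",            # encrypt RPC traffic
}
HDFS_SITE = {
    "dfs.namenode.acls.enabled": "true",   # allow HDFS Access Control Lists
    "dfs.encrypt.data.transfer": "true",   # encrypt block data in transit
}


def render_site_xml(properties):
    """Render a dict of properties in Hadoop's *-site.xml layout."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    if hasattr(ET, "indent"):  # pretty-printing is available in Python 3.9+
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


print(render_site_xml(CORE_SITE))
print(render_site_xml(HDFS_SITE))
```

Enabling Kerberos in practice also requires principals, keytabs, and per-service settings that this fragment does not attempt to show.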

©2016 Cloudera, Inc. All rights reserved