Upgrading CDH

Minimum Required Role: Cluster Administrator (also provided by Full Administrator)

- Parcels – This option uses Cloudera Manager to upgrade CDH and allows you to upgrade your cluster either via a full restart or a rolling restart if you have HDFS high availability enabled and have a Cloudera Enterprise license. The use of Parcels requires that your cluster be managed by Cloudera Manager. This includes any components that are not a regular part of CDH, such as Spark 2.

- Packages – This option is the most time consuming and requires you to log in using ssh and execute a series of package commands on all hosts in your cluster. Cloudera recommends that you instead upgrade your cluster using parcels, which allows Cloudera Manager to distribute the upgraded software to all hosts in the cluster without having to log in to each host. If you installed the cluster using packages, you can upgrade using parcels and the cluster will use parcels for subsequent upgrades.

If the CDH cluster you are upgrading was installed using packages, you can upgrade it using parcels, and the upgraded version of CDH will then use parcels for future upgrades or changes. You can migrate your cluster from using packages to using parcels before starting the upgrade.

The minor version of Cloudera Manager you use to perform the upgrade must be equal to or greater than the CDH minor version. To upgrade Cloudera Manager, see Overview of Upgrading Cloudera Manager.
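The version rule above can be checked before you start. The following is a minimal sketch, not a Cloudera tool: the function names are illustrative, both version strings are typed in by hand, and treating a higher Cloudera Manager major version as acceptable is an assumption.

```shell
# Print the "major minor" fields of a dotted version such as 5.15.1.
# Expects at least a major.minor version string.
major_minor() {
  echo "$1" | awk -F. '{ print $1, $2 }'
}

# Return 0 if Cloudera Manager version $1 is equal to or greater than
# CDH version $2 when compared on major and minor digits only.
cm_can_upgrade_to() {
  cm_mm=$(major_minor "$1")
  cdh_mm=$(major_minor "$2")
  cm_major=${cm_mm% *};   cm_minor=${cm_mm#* }
  cdh_major=${cdh_mm% *}; cdh_minor=${cdh_mm#* }
  # Assumption: a higher major version of Cloudera Manager always qualifies.
  [ "$cm_major" -gt "$cdh_major" ] && return 0
  [ "$cm_major" -eq "$cdh_major" ] && [ "$cm_minor" -ge "$cdh_minor" ]
}
```

For example, `cm_can_upgrade_to 5.15.0 5.14.2` succeeds, while `cm_can_upgrade_to 5.13.0 5.15.0` fails, which indicates that Cloudera Manager must be upgraded first.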

Fill in the following form to create a customized set of instructions for your CDH upgrade:


Important:


- Collect information about your environment.

- From the Cloudera Manager Admin Console

- The version of Cloudera Manager. Go to .

- The version of the JDK deployed. Go to .

- The version of CDH and whether the cluster was installed using parcels or packages. It is displayed next to the cluster name on the Home page.

- The services enabled in your cluster.

Go to .

- Whether HDFS High Availability is enabled.

Go to Clusters, click the HDFS service, and click the Actions menu. High availability is enabled if you see a Disable High Availability menu item.

- Any content not applicable to your environment will not be shown.

- Rollback of your software and data is not supported.

- Do not use this procedure to upgrade a Key Trustee Server Cluster. Follow this procedure instead.

- When HDFS High Availability is enabled, you have a Cloudera Enterprise license, CDH was installed using parcels, and you are doing a minor or maintenance upgrade, you can perform a rolling restart of the services without taking the entire cluster offline.

Note: When upgrading CDH using Rolling Restart:


- Automatic failover does not affect the rolling restart operation. If you have JobTracker high availability configured, Cloudera Manager will fail over the JobTracker during the rolling restart, but configuring JobTracker high availability is not a requirement for performing a rolling upgrade.

- After the upgrade has completed, do not remove the old parcels if there are MapReduce or Spark jobs currently running. These jobs still use the old parcels and must be restarted in order to use the newly upgraded parcel.

- Ensure that Oozie jobs are idempotent.

- Do not use Oozie Shell Actions to run Hadoop-related commands.

- Rolling upgrade of Spark Streaming jobs is not supported. Restart the streaming job once the upgrade is complete, so that the newly deployed version starts being used.

- Runtime libraries must be packaged as part of the Spark application.

- You must use the distributed cache to propagate the job configuration files from the client gateway machines.

- Do not build "uber" or "fat" JAR files that contain third-party dependencies or CDH classes, as these can conflict with the classes that YARN, Oozie, and other services automatically add to the CLASSPATH.

- Build your Spark applications without bundling CDH JARs.
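Before removing old parcels after a rolling restart, one way to look for jobs that are still running is to count the applications that YARN reports as running. This is a minimal sketch that assumes the jobs run on YARN and that each application row printed by `yarn application -list` begins with an ID of the form `application_...`; the function name is illustrative.

```shell
# Count application rows read from stdin.
# Rows are recognized by an ID at the start of the line (assumed format).
count_running_apps() {
  grep -c '^application_' || true
}

# Typical use on a gateway host (not run here):
#   yarn application -list -appStates RUNNING 2>/dev/null | count_running_apps
```

A result of 0 suggests that no YARN applications are still using the old parcels. Any other result means that those jobs should finish or be restarted first.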

Note: If you are upgrading to a maintenance version of CDH, skip any steps that are labeled [Not required for CDH maintenance release upgrades.].

The version numbers for maintenance releases differ only in the third digit, for example when upgrading from CDH 5.8.0 to CDH 5.8.2. See Maintenance Version Upgrades.
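The third-digit rule can be expressed as a small check. This is a minimal sketch with an illustrative function name; it assumes both versions are written as three dot-separated numbers.

```shell
# Return 0 when two CDH versions share the same first two digits
# and differ only in the third digit.
is_maintenance_upgrade() {
  from_prefix=$(echo "$1" | cut -d. -f1,2)
  to_prefix=$(echo "$2" | cut -d. -f1,2)
  from_patch=$(echo "$1" | cut -d. -f3)
  to_patch=$(echo "$2" | cut -d. -f3)
  [ "$from_prefix" = "$to_prefix" ] && [ "$from_patch" != "$to_patch" ]
}
```

For example, `is_maintenance_upgrade 5.8.0 5.8.2` succeeds, so the steps labeled as not required for maintenance releases can be skipped. `is_maintenance_upgrade 5.8.0 5.9.0` fails because the minor version changes.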

Continue reading:

- Before You Begin

- Enter Maintenance Mode

- Back Up HDFS Metadata


- Back Up Databases

- Establish Access to the Software

- Stop the Cluster

- Install CDH Packages

- Run the Upgrade Wizard

- Remove the Previous CDH Version Packages and Refresh Symlinks

- Finalize the HDFS Metadata Upgrade


- Finalize HDFS Rolling Upgrade


- Exit Maintenance Mode

Before You Begin

- You must have SSH access to the Cloudera Manager server hosts and be able to log in using the root account or an account that has password-less sudo permission to all the hosts.

- Review the Requirements and Supported Versions for the new versions you are upgrading to.

- Ensure that Java 1.7 or 1.8 is installed across the cluster. For installation instructions and recommendations, see Java Development Kit Installation or Upgrading to Oracle JDK 1.8.

- Review the CDH 5 Release Notes.

- Read the Incompatible Changes.

- Read the Known Issues.

- Read the Issues Fixed.

- Review the Cloudera Security Bulletins.

- Review the upgrade procedure and reserve a maintenance window with enough time allotted to perform all steps. For production clusters, Cloudera recommends allocating up to a full day maintenance window to perform the upgrade, depending on the number of hosts, the amount of experience you have with Hadoop and Linux, and the particular hardware you are using.

- If you are upgrading from CDH 5.1 or lower, and use Hive Date partition columns, you may need to update the date format. See Date partition columns.

- If the cluster uses Impala, check your SQL against the newest reserved words listed in incompatible changes. If upgrading across multiple versions, or in case of any problems, check against the full list of Impala keywords.

- Run the Security Inspector and fix any reported errors.

Go to .

- Log in to any cluster node as the hdfs user, run the following commands, and correct any reported errors:

hdfs fsck /

Note: The fsck command may take 10 minutes or more to complete, depending on the number of files in your cluster.

hdfs dfsadmin -report

See HDFS Commands Guide in the Apache Hadoop documentation.

- Log in to any DataNode as the hbase user, run the following command, and correct any reported errors:

hbase hbck

See Checking and Repairing HBase Tables.

- If you have configured Hue to use TLS/SSL and you are upgrading from CDH 5.2 or lower to CDH 5.3 or higher, Hue validates CA certificates and requires a truststore. To create a truststore, follow the instructions in Hue as a TLS/SSL Client.

- If your cluster uses the Flume Kafka client, and you are upgrading to CDH 5.8.0 or CDH 5.8.1, perform the extra steps described in Upgrading to CDH 5.8.0 or CDH 5.8.1 When Using the Flume Kafka Client and then continue with the procedures in this topic.

- If your cluster uses Impala and Llama, the Llama role has been deprecated as of CDH 5.9 and you must remove the role from the Impala service before starting the upgrade. If you do not remove this role, the upgrade wizard will halt the upgrade.

To determine if Impala uses Llama:

- Go to the Impala service.

- Select the Instances tab.

- Examine the list of roles in the Role Type column. If Llama appears, the Impala service is using Llama.

To remove the Llama role:

- Go to the Impala service and select .

The Disable YARN and Impala Integrated Resource Management wizard displays.

- Click Continue.

The Disable YARN and Impala Integrated Resource Management Command page displays the progress of the commands to disable the role.

- When the commands have completed, click Finish.

- If your cluster uses Sentry, and you are upgrading from CDH 5.12 or lower, you may need to increase the Java heap memory for Sentry. See Performance Guidelines.
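The HDFS checks in the list above can be captured to log files so that the output is available for review. This is a minimal sketch: the log directory and function names are hypothetical, and it relies on `hdfs fsck` ending its report with a line that states whether the filesystem is HEALTHY.

```shell
# Return 0 if fsck output read from stdin reports a healthy filesystem.
fsck_reports_healthy() {
  grep -q 'is HEALTHY'
}

# Run the HDFS checks as the hdfs user and keep the output for review.
# $1 is an optional log directory (hypothetical default shown).
run_hdfs_checks() {
  log_dir=${1:-$HOME/pre-upgrade-checks}
  mkdir -p "$log_dir"
  hdfs fsck / > "$log_dir/fsck.log" 2>&1
  hdfs dfsadmin -report > "$log_dir/dfsadmin-report.log" 2>&1
  fsck_reports_healthy < "$log_dir/fsck.log"
}
```

Calling `run_hdfs_checks` returns a nonzero status when the fsck log does not report a healthy filesystem. The dfsadmin report still needs to be read by hand.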

Enter Maintenance Mode

To avoid unnecessary alerts during the upgrade process, enter maintenance mode on your cluster before you start the upgrade. This stops email alerts and SNMP traps from being sent, but does not stop checks and configuration validations. Be sure to exit maintenance mode when you have finished the upgrade to reenable Cloudera Manager alerts. More Information.

On the Home > Status tab, click  next to the cluster name and select Enter Maintenance Mode.


Back Up HDFS Metadata

[Not required for CDH maintenance release upgrades.]

This step is required only for the following upgrades:

- CDH 5.0 or 5.1 to 5.2 or higher

- CDH 5.2 or 5.3 to 5.4 or higher

Back up HDFS metadata using the following command:

hdfs dfsadmin -fetchImage myImageName
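The `-fetchImage` argument is a local directory that receives the most recent fsimage from the NameNode. A minimal sketch that saves the image into a dated directory follows; the directory layout and function names are assumptions, and the date suffix follows the same `date +%F` convention used for the database backups in this topic.

```shell
# Build a dated directory name for the fsimage backup.
# $1 is an optional parent directory (defaults to $HOME).
fsimage_backup_dir() {
  echo "${1:-$HOME}/hdfs-fsimage-backup-$(date +%F)"
}

# Create the directory and download the latest fsimage into it.
# Run as the hdfs user; not invoked automatically.
backup_hdfs_metadata() {
  dir=$(fsimage_backup_dir "$1")
  mkdir -p "$dir" && hdfs dfsadmin -fetchImage "$dir"
}
```

Keeping each backup in its own dated directory avoids overwriting an earlier image if the step is repeated.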


Back Up Databases

Gather the following information:

- Type of database (PostgreSQL, Embedded PostgreSQL, MySQL, MariaDB, or Oracle)

- Hostnames of the databases

- Credentials for the databases

- Sqoop, Oozie, and Hue – Go to .

- Hive Metastore – Go to the Hive service, select Configuration, and select the Hive Metastore Database category.

- Sentry – Go to the Sentry service, select Configuration, and select the Sentry Server Database category.

To back up the databases:

- If not already stopped, stop the service.

- On the tab, click to the right of the service name and select Stop.

- Click Stop in the next screen to confirm. When you see a Finished status, the service has stopped.

- Back up the database.

- MySQL

mysqldump --databases database_name --host=database_hostname --port=database_port -u database_username -p > $HOME/database_name-backup-`date +%F`.sql

- PostgreSQL/Embedded

pg_dump -h database_hostname -U database_username -W -p database_port database_name > $HOME/database_name-backup-`date +%F`.sql

- Oracle

- Work with your database administrator to ensure databases are properly backed up.

- Start the service.

- On the tab, click to the right of the service name and select Start.

- Click Start that appears in the next screen to confirm. When you see a Finished status, the service has started.

Note: Backing up databases requires that you stop some services, which may make them unavailable during backup.
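When several services share one MySQL server, the backup command can be wrapped in a loop. This is a minimal sketch: `DB_HOST`, `DB_PORT`, and `DB_USER` are placeholder variables that you set yourself, the function names are illustrative, and the output path follows the naming used in the commands above.

```shell
# Print the dated backup file path for one database name.
backup_path() {
  echo "$HOME/$1-backup-$(date +%F).sql"
}

# Dump each named MySQL database to its own dated file.
# mysqldump prompts for the password once per database.
backup_mysql_databases() {
  for db in "$@"; do
    mysqldump --databases "$db" --host="$DB_HOST" --port="$DB_PORT" \
      -u "$DB_USER" -p > "$(backup_path "$db")" || return 1
  done
}
```

For example, after setting the three variables, `backup_mysql_databases hue oozie` would write one file per database, where `hue` and `oozie` stand in for your actual database names.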

Establish Access to the Software

Package Repository URL:

- SSH into each host in the cluster.

- Redhat / CentOS

Create a file named cloudera_cdh.repo with the following content:

[cdh]
# Packages for CDH
name=CDH
baseurl=https://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/5.15
gpgkey=https://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck=1

- SLES

Create a file named cloudera_cdh.repo with the following content:

[cdh]
# Packages for CDH
name=CDH
baseurl=https://archive.cloudera.com/cdh5/sles/12/x86_64/cdh/5.15
gpgkey=https://archive.cloudera.com/cdh5/sles/12/x86_64/cm/RPM-GPG-KEY-cloudera
gpgcheck=1

- Debian / Ubuntu

Create a file named cloudera_cdh.list with the following content:

# Packages for CDH
deb https://archive.cloudera.com/cdh5/debian/jessie/amd64/cdh/ jessie-cdh5.15 contrib
deb-src https://archive.cloudera.com/cdh5/debian/jessie/amd64/cdh/ jessie-cdh5.15 contrib

The repository file, as created, specifies an upgrade to the most recent maintenance release of the specified minor release. To upgrade to a specific maintenance version, for example 5.15.1, replace 5.15 with 5.15.1 in the generated repository file shown above.

- Back up the existing repository directory.

- Redhat / CentOS

sudo cp -rf /etc/yum.repos.d $HOME/yum.repos.d-`date +%F`

- SLES

sudo cp -rf /etc/zypp/repos.d $HOME/repos.d-`date +%F`

- Debian / Ubuntu

sudo cp -rf /etc/apt/sources.list.d $HOME/sources.list.d-`date +%F`

- Remove any older files in the existing repository directory:

- Redhat / CentOS

sudo rm /etc/yum.repos.d/cloudera*cdh.repo*

- SLES

sudo rm /etc/zypp/repos.d/cloudera*cdh.repo*

- Debian / Ubuntu

sudo rm /etc/apt/sources.list.d/cloudera*cdh.list*

- Copy the repository file created above to the repository directory:

- Redhat / CentOS

sudo cp cloudera_cdh.repo /etc/yum.repos.d/

- SLES

sudo cp cloudera_cdh.repo /etc/zypp/repos.d/

- Debian / Ubuntu

sudo cp cloudera_cdh.list /etc/apt/sources.list.d/
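The repository steps in this section can be gathered into a few helper functions to run on each host. This is a minimal sketch under several assumptions: the function names and the family keywords (`redhat`, `sles`, `debian`) are illustrative, the version pinning handles only the `baseurl` line of an RPM-style `.repo` file, and `rm -f` is used in place of `rm` so that a host with no older file does not stop the script.

```shell
# Map an OS family keyword to its package repository directory.
repo_dir_for() {
  case "$1" in
    redhat) echo /etc/yum.repos.d ;;
    sles)   echo /etc/zypp/repos.d ;;
    debian) echo /etc/apt/sources.list.d ;;
    *)      echo "unknown OS family: $1" >&2; return 1 ;;
  esac
}

# Print the repo file with the minor version at the end of the baseurl
# line replaced by a specific maintenance version (for example 5.15.1).
pin_maintenance_version() {
  repo_file=$1; minor=$2; maintenance=$3
  escaped_minor=$(printf '%s' "$minor" | sed 's/\./\\./g')
  sed "/^baseurl=/ s#/${escaped_minor}\$#/${maintenance}#" "$repo_file"
}

# Back up the repository directory, remove older CDH repository files,
# and copy the new file into place. Requires sudo; not invoked here.
install_repo_file() {
  family=$1; new_file=$2
  dir=$(repo_dir_for "$family") || return 1
  case "$family" in debian) ext=list ;; *) ext=repo ;; esac
  sudo cp -rf "$dir" "$HOME/$(basename "$dir")-$(date +%F)"
  sudo rm -f "$dir"/cloudera*cdh."$ext"*
  sudo cp "$new_file" "$dir/"
}
```

For example, `pin_maintenance_version cloudera_cdh.repo 5.15 5.15.1 > pinned.repo` writes a pinned copy without changing the original, and `install_repo_file redhat cloudera_cdh.repo` performs the backup, cleanup, and copy in order.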

Stop the Cluster

- Stop the cluster before proceeding with upgrading CDH using packages.

Install CDH Packages

- SSH into each host in the cluster.

- Redhat / CentOS

sudo yum clean all
sudo yum install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-httpfs hadoop-kms hbase hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie parquet pig pig-udf-datafu search sentry solr solr-mapreduce spark-python sqoop sqoop2 whirr zookeeper

- SLES

sudo zypper clean --all
sudo zypper install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-httpfs hadoop-kms hbase hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie parquet pig pig-udf-datafu search sentry solr solr-mapreduce spark-python sqoop sqoop2 whirr zookeeper

- Debian / Ubuntu

sudo apt-get update
sudo apt-get install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-httpfs hadoop-kms hbase hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie parquet pig pig-udf-datafu search sentry solr solr-mapreduce spark-python sqoop sqoop2 whirr zookeeper

- Restart the Cloudera Manager Agent.

- Redhat 7, SLES 12, Debian 8, Ubuntu 16.04

sudo systemctl restart cloudera-scm-agent

You should see no response if there are no errors starting the agent.

- Redhat 5 or 6, SLES 11, Debian 6 or 7, Ubuntu 12.04, 14.04

sudo service cloudera-scm-agent restart

You should see the following:

Starting cloudera-scm-agent: [ OK ]
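When the same script runs on hosts with different operating system releases, the restart command can be chosen at run time. This is a minimal sketch that assumes the presence of the `systemctl` binary is a good enough signal that the host uses systemd; the function name is illustrative.

```shell
# Print the agent restart command that matches this host.
# Assumption: hosts with a systemctl binary are managed by systemd.
agent_restart_command() {
  if command -v systemctl >/dev/null 2>&1; then
    echo "sudo systemctl restart cloudera-scm-agent"
  else
    echo "sudo service cloudera-scm-agent restart"
  fi
}
```

Printing the command rather than running it lets you review the choice first; `eval "$(agent_restart_command)"` would then run it.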

Run the Upgrade Wizard

- If your cluster has Kudu 1.4.0 or lower installed and you want to upgrade to CDH 5.13 or higher, deactivate the existing Kudu parcel. Starting with Kudu 1.5.0 / CDH 5.13, Kudu is part of the CDH parcel and does not need to be installed separately.

- If your cluster has Spark 2.0 or Spark 2.1 installed and you want to upgrade to CDH 5.13 or higher, you must download and install Spark 2.1 release 2 or later.

To install these versions of Spark, do the following before running the CDH Upgrade Wizard:

- Install the Custom Service Descriptor (CSD) file. See Installing Spark 2.1 or Installing Spark 2.2.

Note: Spark 2.2 requires that JDK 1.8 be deployed throughout the cluster. JDK 1.7 is not supported for Spark 2.2.

- Download, distribute, and activate the Parcel for the version of Spark that you are installing:

- Spark 2.1 release 2: The parcel name includes "cloudera2" in its name.

- Spark 2.2 release 1: The parcel name includes "cloudera1" in its name.

- Spark 2.2 release 2: The parcel name includes "cloudera2" in its name.

- If your cluster has GPLEXTRAS installed, download and distribute the version of the GPLEXTRAS parcel that matches the version of CDH that you are upgrading to.

- From the tab, click next to the cluster name and select Upgrade Cluster.

- If the option to pick between packages and parcels displays, select Use Parcels.

The Getting Started page of the upgrade wizard displays and lists the versions of CDH that are available for upgrade. If no qualifying parcels are listed, or you want to upgrade to a different version of CDH:

- Click the Remote Parcel Repository URLs link and add the appropriate parcel URL. See Parcel Configuration Settings for more information.

- Click the Cloudera Manager logo to return to the Home page.

- From the tab, click next to the cluster name and select Upgrade Cluster.

The Getting Started page of the upgrade wizard displays.

- Select the CDH version and download the parcels.

- Cloudera Manager 5.14 and lower:

- In the Choose CDH Version (Parcels) section, select the CDH version that you want to upgrade to.

- Click Continue.

A page displays the version you are upgrading to and asks you to confirm that you have completed some additional steps.

- Click Yes, I have performed these steps.

- Click Continue.

- Cloudera Manager verifies that the agents are responsive and that the correct software is installed. When you see the No Errors Found message, click Continue.

The selected parcels are downloaded, distributed, and unpacked.

- Click Continue.

The Host Inspector runs. Examine the output and correct any reported errors.

- Cloudera Manager 5.15 and higher:

- In the Upgrade to CDH Version drop-down list, select the version of CDH you want to upgrade to.

The Upgrade Wizard performs some checks on configurations, health, and compatibility and reports the results. Fix any reported issues before continuing.

- Click Run Host Inspector.

The Host Inspector runs. Click Show Inspector Results to see the Host Inspector report (opens in a new browser tab).

- Read the notices for steps you must complete before upgrading, select Yes, I have performed these steps. ... after completing the steps, and click Continue.

The selected parcels are downloaded, distributed, and unpacked. The Continue button turns blue when this process finishes.

- In the Upgrade to CDH Version drop-down list, select the version of CDH you want to upgrade to.

- Cloudera Manager 5.14 and lower:

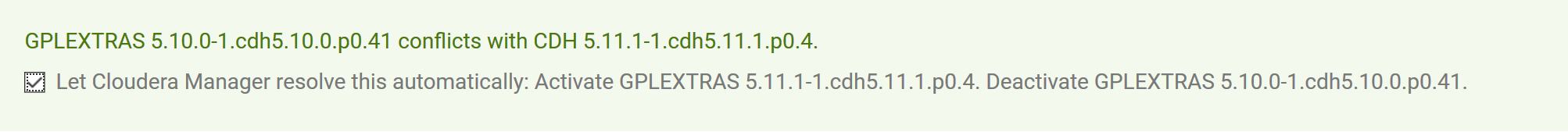

- If you downloaded a new version of the GPLEXTRAS parcel, the Upgrade Wizard displays a message that the GPLEXTRAS parcel conflicts with the version of the CDH

parcel, similar to the following:

Select the option to resolve the conflicts automatically.

Cloudera Manager deactivates the old version of the GPLEXTRAS parcel, activates the new version and verifies that all hosts have the correct software installed.

Cloudera Manager may also offer to resolve other parcels with conflicting versions.

Click Continue when the parcel is installed.

- Click Continue.

The Choose Upgrade Procedure screen displays. Select the upgrade procedure from the following options:

- Rolling Restart

Cloudera Manager upgrades services and performs a rolling restart.

This option is only available if you have enabled high availability for HDFS and you are performing a minor/maintenance upgrade.

Services that do not support rolling restart undergo a normal restart, and are not available during the restart process.

Configure the following parameters for the rolling restart (optional):

Batch Size – Number of roles to include in a batch. Cloudera Manager restarts the worker roles rack-by-rack, in alphabetical order, and within each rack, hosts are restarted in alphabetical order. If you use the default replication factor of 3, Hadoop tries to keep the replicas on at least 2 different racks. So if you have multiple racks, you can use a higher batch size than the default 1. However, using a batch size that is too high means that fewer worker roles are active at any time during the upgrade, which can cause temporary performance degradation. If you are using a single rack, restart one worker node at a time to ensure data availability during upgrade.

Sleep between Batches – Amount of time Cloudera Manager waits before starting the next batch. Applies only to services with worker roles.

Failed Batches Threshold – The number of batch failures that cause the entire rolling restart to fail. For example, if you have a very large cluster, you can use this option to allow some failures when you know that the cluster is functional when some worker roles are down.

- Full Cluster Restart

Cloudera Manager performs all service upgrades and restarts the cluster.

- Manual Upgrade

Cloudera Manager configures the cluster to the specified CDH version but performs no upgrades or service restarts. Manually upgrading is difficult and for advanced users only. Manual upgrades allow you to selectively stop and restart services to prevent or mitigate downtime for services or clusters where rolling restarts are not available.

To perform a manual upgrade: See Performing Upgrade Wizard Actions Manually for the required steps.


- Click Continue.

The Upgrade Cluster Command screen displays the result of the commands run by the wizard as it shuts down all services, activates the new parcels, upgrades services, deploys client configuration files, restarts services, and performs a rolling restart of the services that support it.

If any of the steps fail, correct any reported errors and click the Resume button. Cloudera Manager will skip restarting roles that have already successfully restarted. Alternatively, return to the tab and follow Performing Upgrade Wizard Actions Manually.

Note: If Cloudera Manager detects a failure while upgrading CDH, Cloudera Manager displays a dialog box where you can create a diagnostic bundle to send to Cloudera Support so they can help you recover from the failure. The cluster name and time duration fields are pre-populated to capture the correct data.

- Click Continue.

If your cluster was previously installed or upgraded using packages, the wizard may indicate that some services cannot start because their parcels are not available. To download the required parcels:

- In another browser tab, open the Cloudera Manager Admin Console.

- Select .

- Locate the row containing the missing parcel and click the button to Download, Distribute, and then Activate the parcel.

- Return to the upgrade wizard and click the Resume button.

The Upgrade Wizard continues upgrading the cluster.

- Click Finish to return to the Home page.

Remove the Previous CDH Version Packages and Refresh Symlinks

[Not required for CDH maintenance release upgrades.]

If your previous installation of CDH was done using packages, remove those packages on all hosts where you installed the parcels and refresh the symlinks so that clients will run the new software versions.

Skip this step if your previous installation or upgrade used parcels.

- If your Hue service uses the embedded SQLite database, back up /var/lib/hue/desktop.db to a location that is not /var/lib/hue because this directory is removed when the packages are removed.

- Uninstall the CDH packages on each host:

Not including Impala and Search

- Redhat / CentOS

sudo yum remove bigtop-utils bigtop-jsvc bigtop-tomcat hue-common sqoop2-client

- SLES

sudo zypper remove bigtop-utils bigtop-jsvc bigtop-tomcat hue-common sqoop2-client

- Debian / Ubuntu

sudo apt-get purge bigtop-utils bigtop-jsvc bigtop-tomcat hue-common sqoop2-client

Including Impala and Search

- Redhat / CentOS

sudo yum remove 'bigtop-*' hue-common impala-shell solr-server sqoop2-client hbase-solr-doc avro-libs crunch-doc avro-doc solr-doc

- SLES

sudo zypper remove 'bigtop-*' hue-common impala-shell solr-server sqoop2-client hbase-solr-doc avro-libs crunch-doc avro-doc solr-doc

- Debian / Ubuntu

sudo apt-get purge 'bigtop-*' hue-common impala-shell solr-server sqoop2-client hbase-solr-doc avro-libs crunch-doc avro-doc solr-doc

- Restart all the Cloudera Manager Agents to force an update of the symlinks to point to the newly installed components on each host:

sudo service cloudera-scm-agent restart

- If your Hue service uses the embedded SQLite database, restore the database you backed up:

- Stop the Hue service.

- Copy the backup from the temporary location to the newly created Hue database directory, /var/lib/hue.

- Start the Hue service.

Finalize the HDFS Metadata Upgrade

[Not required for CDH maintenance release upgrades.]

This step is required only for the following upgrades:

- CDH 5.0 or 5.1 to 5.2 or higher

- CDH 5.2 or 5.3 to 5.4 or higher

To determine if you can finalize, run important workloads and ensure that they are successful. Once you have finalized the upgrade, you cannot roll back to a previous version of HDFS without using backups. Verifying that you are ready to finalize the upgrade can take a long time.

Make sure you have enough free disk space, keeping in mind that the following behavior continues until the upgrade is finalized:

- Deleting files does not free up disk space.

- Using the balancer causes all moved replicas to be duplicated.

- All on-disk data representing the NameNodes metadata is retained, which could more than double the amount of space required on the NameNode and JournalNode disks.

- Go to the HDFS service.

- Click the Instances tab.

- Select the NameNode instance. If you have enabled high availability for HDFS, select NameNode (Active).

- Select and click Finalize Metadata Upgrade to confirm.


Finalize HDFS Rolling Upgrade

[Not required for CDH maintenance release upgrades.]

This step is required only for the following upgrades:

- CDH 5.0 or 5.1 to 5.2 or higher

- CDH 5.2 or 5.3 to 5.4 or higher

To determine if you can finalize, run important workloads and ensure that they are successful. Once you have finalized the upgrade, you cannot roll back to a previous version of HDFS without using backups. Verifying that you are ready to finalize the upgrade can take a long time.

- Go to the HDFS service.

- Select and click Finalize Rolling Upgrade to confirm.


Exit Maintenance Mode

If you entered maintenance mode during this upgrade, exit maintenance mode.

On the tab, click  next to the cluster name and select Exit Maintenance Mode.


©2016 Cloudera, Inc. All rights reserved